Ambient occlusion, of course, is essential to modern 3D rendering. It adds "life" or "atmosphere" to 3D models in environments, and once you've seen anything with it, you'll never settle for anything less.

On The Chronicles of Riddick we did our first ambient occlusion rendering, and while it worked fine, it was very slow to compute. So slow, in fact, that we did ambient occlusion as a pre-process on the model, and saved the model with the occlusion baked in. Fortunately, we were dealing with almost-rigid spaceships and props most of the time, and this worked fine.

I noticed that all commercial renderers now support ambient occlusion to some extent. I've done some testing with the Gelato beta and RenderMan 11.5 (both, of course, obsolete now). We also had an overly aggressive animator take home a shot and do the ambient occlusion on his home network using the Brazil renderer. My experience is that these obsolete versions of Gelato and RenderMan worked OK, but had significant artifacts and were pretty slow -- whereas the Brazil AO was amazingly fast and seemed artifact free, but it doesn't run on Linux (yet!). Brazil's AO seemed so fast that I could hardly believe it; they must be doing some amazing acceleration. I couldn't figure it out -- but if you think about ambient occlusion, you realize that the close polygons are much more important than the far away ones, and the big ones are more important than the little ones, so there has to be some way of coming up with an approximate solution quickly.

We also thought about using hardware rendering to accelerate ambient occlusion. We hired this spectacular programmer, Hal Bertram, to experiment with this, and he did it in an afternoon by rendering the scene with the light in 256 different directions and averaging these images together. Unfortunately, hardware at the time (this was almost two years ago) was still pretty limited, and there were serious artifacts (aliasing, limited Z resolution, banding...), but it was super fast (a few seconds a frame).

Finally I read of a nice acceleration for AO that is part of the GPU Gems 2 book. As with many things in that book, it's a technique that applies perfectly well to traditional computers too -- although it would be slower on traditional computers (and perhaps that delta will increase over time, as GPUs have been increasing in speed somewhat faster than CPUs). At this point, the article is available online at http://download.nvidia.com/developer/GPU_Gems_2/GPU_Gems2_ch14.pdf, although you can always just go buy the book.

The trick in this article is to represent each vertex as a disk, and calculate how much each disk shadows each other disk -- independently! -- and add them up. This is quick and easy, but it's still an O(n^2) algorithm.

The acceleration comes from building a spatial tree. At each level of the tree, you have a list of disks in that part of the tree, plus some kind of approximation of all the disks from there on down. When calculating the occlusion for a particular vertex, you note how much shadow a particular subtree might contribute, and if it's less than a tolerance parameter, you use the approximate model.

The article in the book is just about that vague, leaving a lot of room for interpretation.

For my tree, I used a kd tree. The root of the tree contains the whole model, and the bounding box is divided into two subtrees by a median plane -- that is, half the discs are on one side of the plane, half on the other. I choose the axis of the plane (x, y, or z) whose smaller half is the largest. That is, if I have a box that has dimensions (10, 5, 3) and the median in each dimension is at (3, 2, 1) (say), then the x dimension would be partitioned into two parts 3 and 7 units wide, the y dimension into two parts 2 and 3 wide, and the z dimension into two parts 1 and 2 wide. The x dimension has the largest smaller half (3), so that cell is split in the x dimension. I think that probably any reasonable partition scheme would give almost identical results, but I thought that this scheme would yield somewhat-cubical boxes with equal numbers of discs reasonably quickly as you go down the tree.
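
The axis choice can be sketched as a small C function. The name `choose_split_axis` and its argument layout are hypothetical; it assumes the per-axis medians of the disc centers have already been computed.

```c
/* Pick the split axis (0 = x, 1 = y, 2 = z) whose smaller half is largest.
   box_min/box_max bound the cell; median[] holds the median disc position
   along each axis. */
int choose_split_axis(const float box_min[3], const float box_max[3],
                      const float median[3])
{
    int best_axis = 0;
    float best_extent = -1.0f;
    for (int axis = 0; axis < 3; axis++) {
        float lo = median[axis] - box_min[axis];  /* width below the median */
        float hi = box_max[axis] - median[axis];  /* width above the median */
        float smaller = lo < hi ? lo : hi;
        if (smaller > best_extent) {
            best_extent = smaller;
            best_axis = axis;
        }
    }
    return best_axis;
}
```

For the (10, 5, 3) box with medians at (3, 2, 1), the smaller halves are 3, 2, and 1, so the function returns 0 (the x axis).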

At each level, I note the "large" discs, ones that cover a large part of the cell. As you traverse the tree, you have to evaluate the occlusion immediately for the large discs. These large discs are not included in the subtrees.

Finally, my approximation is that each node has an array of 24 structures. Imagine a cube, blow it up into a sphere, and imagine four equally-spaced normals emitted from each face. In each node in my kdtree, there are 24 entries containing the sum of the areas of the discs that correspond most closely to one of these normals, and the average position of the center of these discs.
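
One way to index those 24 directions is sketched below in C. The exact placement of the four normals on each face is not spelled out above, so this is a guess: each cube face is split into a 2x2 grid and the normal points at the center of each sub-face. The function names are hypothetical.

```c
#include <math.h>

/* Map a normal to one of 24 bins: 6 cube faces x 4 quadrants per face.
   The face comes from the dominant axis and its sign; the quadrant comes
   from the signs of the other two components. */
int normal_to_bin(const float n[3])
{
    float a[3] = { fabsf(n[0]), fabsf(n[1]), fabsf(n[2]) };
    int axis = 0;
    if (a[1] > a[axis]) axis = 1;
    if (a[2] > a[axis]) axis = 2;
    int u = (axis + 1) % 3, v = (axis + 2) % 3;
    int face = 2 * axis + (n[axis] < 0.0f ? 1 : 0);
    int quad = (n[u] < 0.0f ? 1 : 0) + (n[v] < 0.0f ? 2 : 0);
    return 4 * face + quad;
}

/* Inverse mapping: the representative unit normal for a bin, assumed to
   point at the center of one quarter of a cube face (offsets of 0.5). */
void bin_to_normal(int bin, float out[3])
{
    int face = bin / 4, quad = bin % 4;
    int axis = face / 2;
    int u = (axis + 1) % 3, v = (axis + 2) % 3;
    const float inv_len = 1.0f / sqrtf(1.5f); /* length of (1, 0.5, 0.5) */
    out[axis] = ((face % 2) ? -1.0f : 1.0f) * inv_len;
    out[u] = ((quad & 1) ? -0.5f : 0.5f) * inv_len;
    out[v] = ((quad & 2) ? -0.5f : 0.5f) * inv_len;
}
```

With these directions, picking the dominant axis and the two signs selects the same bin as taking the largest dot product against all 24 representative normals, so no search is needed when accumulating areas.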

At some point when traversing the tree, you decide that you can use the approximate value. You then build proxy discs from the array, and you don't have to traverse the subtrees below that node. Here's my tree cell structure, just to help explain what's going on.

    typedef struct KDtree {
        BOX3F box;              // bounding box of this cell
        struct KDtree *child0;  // child trees -- dividing plane immaterial
        struct KDtree *child1;
        int n_vert;             // number of vertices at this level
        OBJ_VERT **vert;        // OBJ_VERT contains position, normal, area
        float area[24];         // sum of areas of disks
        V3F avg_pos[24];        // average position of disks with this normal
        float total_area;       // sum of area of all discs in subtree
    } KDtree;

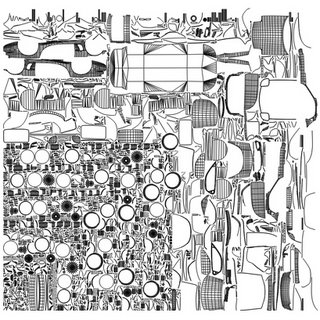

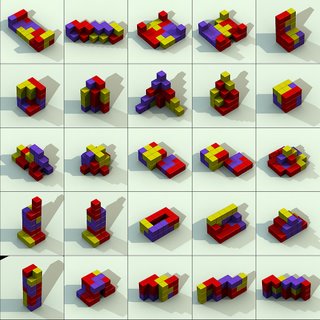

This all works great. On the 50,000 polygon model shown, I can calculate the ambient occlusion in less than 10 seconds with a simple 500 line program, with very good looking results. This compares to something like 10 minutes for my previous, heavily optimized, ray-tracing technique. Big win. If I spend some more time optimizing, I'll bet I can cut it down to 5 seconds.

One further note -- in the original GPU Gems 2 article, they recommend a very odd form factor calculation for the shadows:

$$1 - \frac{r \cos\theta_E \, \max(1,\ 4\cos\theta_R)}{\sqrt{A_E/\pi + r^2}}$$

but later, when discussing radiance transfer, they use the more standard form. I use an equation similar to their radiance transfer equation:

$$1 - \frac{A_E \cos\theta_E \cos\theta_R}{\pi r^2}$$

Here $\theta_E$ and $\theta_R$ are the angles at the emitter and the receiver, $A_E$ is the area of the emitter disc, and $r$ is the distance between the two.

I have to believe that the funky equation is designed to take into account some artifact of their approximation paradigm.